Following Kubernetes The Hard Way

I wrote this article while following Kelsey Hightower’s Kubernetes The Hard Way guide, to understand the internal architecture of Kubernetes.

Overview

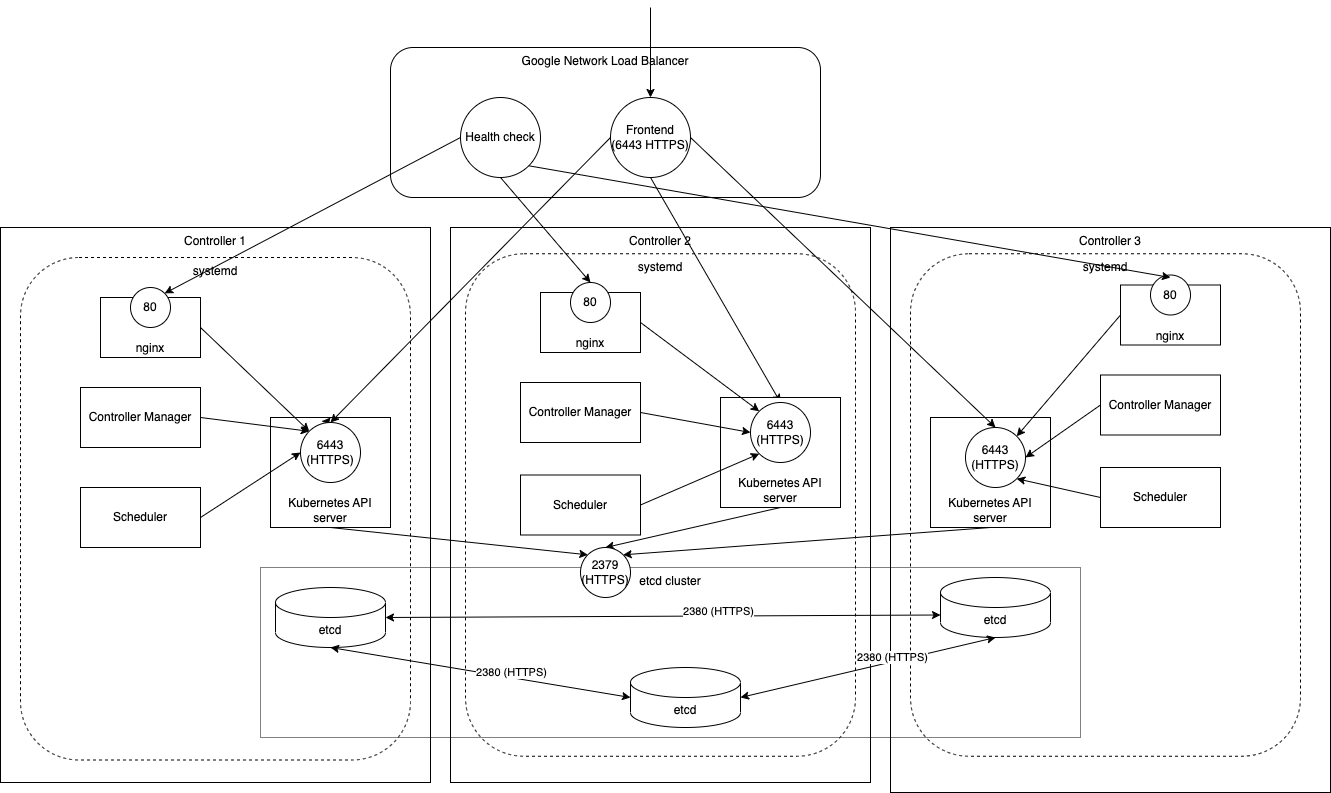

Control plane

Each node of the control plane runs:

- etcd: stores the cluster state
  - 2379: client API port
  - 2380: peer API port
- Kubernetes API Server
  - 6443
- Scheduler
- Controller Manager
- nginx
  - For the HTTP health check from the GCP load balancer

Each component runs as a systemd service.

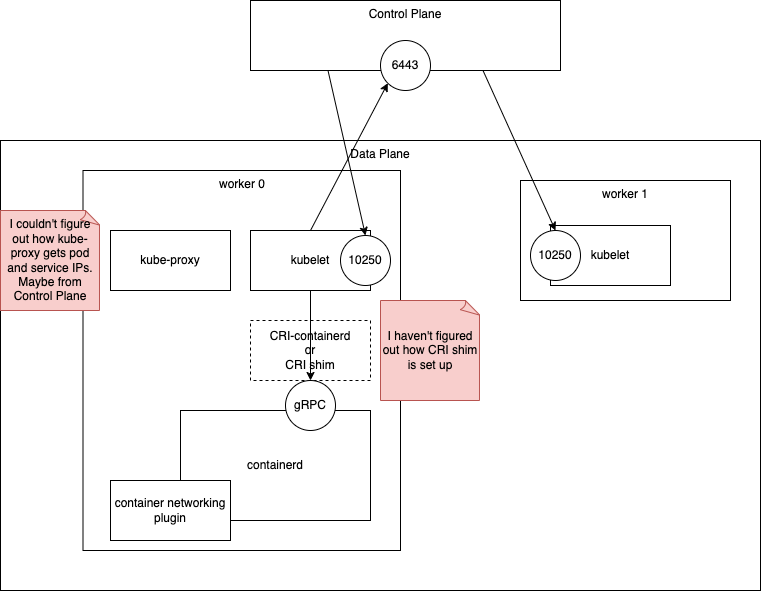

Data plane

- containerd
  - started by systemd
- Container networking plugin (CNI)
  - See this article or this article for an overview of CNI
  - bridge network configuration
- kubelet
  - started by systemd
- kube-proxy
  - started by systemd
  - iptables mode
- CoreDNS
  - runs inside Kubernetes
- CLIs
  - runc
  - crictl
  - kubectl

Networking

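- Pod to Service: iptables rules managed by kube-proxy
  - However, I couldn’t find the iptables rules that route traffic for a Kubernetes Service. In iptables mode, kube-proxy should put them in the `nat` table, starting from the `KUBE-SERVICES` chain.
- Pod to Pod across nodes: VPC routes that send each node’s Pod CIDR to that node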
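Step 11 creates one VPC route per worker, following a simple convention: worker i has internal IP 10.240.0.2i and owns Pod CIDR 10.200.i.0/24. A minimal sketch of that mapping (the helper name `pod_route_for` is my own, not from the guide):

```shell
#!/bin/sh
# Sketch: print "<route name> <Pod CIDR> <next hop>" for worker number $1,
# following the convention used in this cluster (hypothetical helper).
pod_route_for() {
  n="$1"
  printf 'kubernetes-route-10-200-%s-0-24 10.200.%s.0/24 10.240.0.2%s\n' "$n" "$n" "$n"
}

for i in 0 1 2; do
  pod_route_for "$i"
done
```

Each line corresponds to one `gcloud compute routes create` call in step 11.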

2. Client tools

```shell
brew install cfssl
```

```
> cfssl version
Version: 1.6.4
Runtime: go1.20.3

> cfssljson --version
Version: 1.6.4
Runtime: go1.20.3
```

3. Compute resources

I set them up with Terraform, renaming the compute resources:

- from worker-$n to k8s-worker-$n
- from controller-$n to k8s-controller-$n
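Since every loop in this article repeats the renamed instance names, a small helper could generate them instead. This is a hypothetical convenience function, not part of the guide:

```shell
#!/bin/sh
# Sketch: print the renamed instances for a role ("worker" or "controller"),
# e.g. "k8s-worker-0 k8s-worker-1 k8s-worker-2". Hypothetical helper.
instance_names() {
  role="$1"
  names=""
  for n in 0 1 2; do
    names="${names:+$names }k8s-${role}-${n}"
  done
  printf '%s\n' "$names"
}

instance_names worker
instance_names controller
```

For example, `for instance in $(instance_names worker); do echo "${instance}"; done` relies on word splitting of the unquoted substitution.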

4. Certificate authority

Certificate Authority

```shell
cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "8760h"
    },
    "profiles": {
      "kubernetes": {
        "usages": ["signing", "key encipherment", "server auth", "client auth"],
        "expiry": "8760h"
      }
    }
  }
}
EOF
```
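All the CSR files in this section share the same key settings and location fields; only CN, O and OU change. As a sketch, a hypothetical `write_csr` helper could generate them instead of repeating the heredoc:

```shell
#!/bin/sh
# Sketch: write <prefix>-csr.json in the same shape as the CSRs in this section.
# Usage: write_csr <file-prefix> <CN> <O> [OU]   (hypothetical helper)
write_csr() {
  prefix="$1"; cn="$2"; org="$3"; ou="${4:-Kubernetes The Hard Way}"
  cat > "${prefix}-csr.json" <<EOF
{
  "CN": "${cn}",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    {
      "C": "US",
      "L": "Portland",
      "O": "${org}",
      "OU": "${ou}",
      "ST": "Oregon"
    }
  ]
}
EOF
}

# Equivalent to the admin-csr.json used for the admin client certificate
write_csr admin admin system:masters
```

For the CA itself, `write_csr ca Kubernetes Kubernetes CA` would produce the equivalent of ca-csr.json.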

```shell
cat > ca-csr.json <<EOF
{
  "CN": "Kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "L": "Portland",
      "O": "Kubernetes",
      "OU": "CA",
      "ST": "Oregon"
    }
  ]
}
EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca
```

The Admin Client Certificate

```shell
cat > admin-csr.json <<EOF
{
  "CN": "admin",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "L": "Portland",
      "O": "system:masters",
      "OU": "Kubernetes The Hard Way",
      "ST": "Oregon"
    }
  ]
}
EOF

cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  admin-csr.json | cfssljson -bare admin
```

The Kubelet Client Certificates

```shell
for instance in k8s-worker-0 k8s-worker-1 k8s-worker-2; do
cat > ${instance}-csr.json <<EOF
{
  "CN": "system:node:${instance}",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "L": "Portland",
      "O": "system:nodes",
      "OU": "Kubernetes The Hard Way",
      "ST": "Oregon"
    }
  ]
}
EOF

EXTERNAL_IP=$(gcloud compute instances describe ${instance} \
  --format 'value(networkInterfaces[0].accessConfigs[0].natIP)')

INTERNAL_IP=$(gcloud compute instances describe ${instance} \
  --format 'value(networkInterfaces[0].networkIP)')

cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -hostname=${instance},${EXTERNAL_IP},${INTERNAL_IP} \
  -profile=kubernetes \
  ${instance}-csr.json | cfssljson -bare ${instance}
done
```

The Controller Manager Client Certificate

```shell
cat > kube-controller-manager-csr.json <<EOF
{
  "CN": "system:kube-controller-manager",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "L": "Portland",
      "O": "system:kube-controller-manager",
      "OU": "Kubernetes The Hard Way",
      "ST": "Oregon"
    }
  ]
}
EOF

cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager
```

The Kube Proxy Client Certificate

```shell
cat > kube-proxy-csr.json <<EOF
{
  "CN": "system:kube-proxy",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "L": "Portland",
      "O": "system:node-proxier",
      "OU": "Kubernetes The Hard Way",
      "ST": "Oregon"
    }
  ]
}
EOF

cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  kube-proxy-csr.json | cfssljson -bare kube-proxy
```

The Scheduler Client Certificate

```shell
cat > kube-scheduler-csr.json <<EOF
{
  "CN": "system:kube-scheduler",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "L": "Portland",
      "O": "system:kube-scheduler",
      "OU": "Kubernetes The Hard Way",
      "ST": "Oregon"
    }
  ]
}
EOF

cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  kube-scheduler-csr.json | cfssljson -bare kube-scheduler
```

The Kubernetes API Server Certificate

```shell
KUBERNETES_PUBLIC_ADDRESS=$(gcloud compute addresses describe kubernetes-the-hard-way \
  --region $(gcloud config get-value compute/region) \
  --format 'value(address)')

KUBERNETES_HOSTNAMES=kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.svc.cluster.local

cat > kubernetes-csr.json <<EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "L": "Portland",
      "O": "Kubernetes",
      "OU": "Kubernetes The Hard Way",
      "ST": "Oregon"
    }
  ]
}
EOF

cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -hostname=10.32.0.1,10.240.0.10,10.240.0.11,10.240.0.12,${KUBERNETES_PUBLIC_ADDRESS},127.0.0.1,${KUBERNETES_HOSTNAMES} \
  -profile=kubernetes \
  kubernetes-csr.json | cfssljson -bare kubernetes
```

The Service Account Key Pair

```shell
cat > service-account-csr.json <<EOF
{
  "CN": "service-accounts",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "L": "Portland",
      "O": "Kubernetes",
      "OU": "Kubernetes The Hard Way",
      "ST": "Oregon"
    }
  ]
}
EOF

cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  service-account-csr.json | cfssljson -bare service-account
```

Distribute the Client and Server Certificates

```shell
for instance in k8s-worker-0 k8s-worker-1 k8s-worker-2; do
  gcloud compute scp ca.pem ${instance}-key.pem ${instance}.pem ${instance}:~/
done

for instance in k8s-controller-0 k8s-controller-1 k8s-controller-2; do
  gcloud compute scp ca.pem ca-key.pem kubernetes-key.pem kubernetes.pem \
    service-account-key.pem service-account.pem ${instance}:~/
done
```

5. Kubernetes configuration files

```shell
KUBERNETES_PUBLIC_ADDRESS=$(gcloud compute addresses describe kubernetes-the-hard-way \
  --region $(gcloud config get-value compute/region) \
  --format 'value(address)')
```

The kubelet Kubernetes Configuration File

```shell
for instance in k8s-worker-0 k8s-worker-1 k8s-worker-2; do
  kubectl config set-cluster kubernetes-the-hard-way \
    --certificate-authority=ca.pem \
    --embed-certs=true \
    --server=https://${KUBERNETES_PUBLIC_ADDRESS}:6443 \
    --kubeconfig=${instance}.kubeconfig

  kubectl config set-credentials system:node:${instance} \
    --client-certificate=${instance}.pem \
    --client-key=${instance}-key.pem \
    --embed-certs=true \
    --kubeconfig=${instance}.kubeconfig

  kubectl config set-context default \
    --cluster=kubernetes-the-hard-way \
    --user=system:node:${instance} \
    --kubeconfig=${instance}.kubeconfig

  kubectl config use-context default --kubeconfig=${instance}.kubeconfig
done
```

The kube-proxy Kubernetes Configuration File

```shell
kubectl config set-cluster kubernetes-the-hard-way \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --server=https://${KUBERNETES_PUBLIC_ADDRESS}:6443 \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials system:kube-proxy \
  --client-certificate=kube-proxy.pem \
  --client-key=kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes-the-hard-way \
  --user=system:kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
```

The kube-controller-manager Kubernetes Configuration File

```shell
kubectl config set-cluster kubernetes-the-hard-way \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --server=https://127.0.0.1:6443 \
  --kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=kube-controller-manager.pem \
  --client-key=kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes-the-hard-way \
  --user=system:kube-controller-manager \
  --kubeconfig=kube-controller-manager.kubeconfig

kubectl config use-context default --kubeconfig=kube-controller-manager.kubeconfig
```

The kube-scheduler Kubernetes Configuration File

```shell
kubectl config set-cluster kubernetes-the-hard-way \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --server=https://127.0.0.1:6443 \
  --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler \
  --client-certificate=kube-scheduler.pem \
  --client-key=kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes-the-hard-way \
  --user=system:kube-scheduler \
  --kubeconfig=kube-scheduler.kubeconfig

kubectl config use-context default --kubeconfig=kube-scheduler.kubeconfig
```

The admin Kubernetes Configuration File

```shell
kubectl config set-cluster kubernetes-the-hard-way \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --server=https://127.0.0.1:6443 \
  --kubeconfig=admin.kubeconfig

kubectl config set-credentials admin \
  --client-certificate=admin.pem \
  --client-key=admin-key.pem \
  --embed-certs=true \
  --kubeconfig=admin.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes-the-hard-way \
  --user=admin \
  --kubeconfig=admin.kubeconfig

kubectl config use-context default --kubeconfig=admin.kubeconfig
```

Distribute the Kubernetes Configuration Files

```shell
for instance in k8s-worker-0 k8s-worker-1 k8s-worker-2; do
  gcloud compute scp ${instance}.kubeconfig kube-proxy.kubeconfig ${instance}:~/
done

for instance in k8s-controller-0 k8s-controller-1 k8s-controller-2; do
  gcloud compute scp admin.kubeconfig kube-controller-manager.kubeconfig kube-scheduler.kubeconfig ${instance}:~/
done
```

6. Generating the Data Encryption Config and Key

```shell
ENCRYPTION_KEY=$(head -c 32 /dev/urandom | base64)

cat > encryption-config.yaml <<EOF
kind: EncryptionConfig
apiVersion: v1
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: ${ENCRYPTION_KEY}
      - identity: {}
EOF
```
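The guide generates a 32-byte key, i.e. an AES-256 key for the `aescbc` provider. A quick sanity check before copying the config to the controllers, assuming a `base64` that accepts `-d` (GNU coreutils) and a hypothetical helper name:

```shell
#!/bin/sh
# Sketch: report how many bytes a base64-encoded key decodes to.
# Assumes GNU coreutils base64 (-d to decode). Hypothetical helper.
key_byte_length() {
  printf '%s' "$1" | base64 -d | wc -c | tr -d ' '
}

# A key generated the same way as above decodes to 32 bytes.
key_byte_length "$(head -c 32 /dev/urandom | base64)"   # prints 32
```

In the same shell, `key_byte_length "$ENCRYPTION_KEY"` should also print 32.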

```shell
for instance in k8s-controller-0 k8s-controller-1 k8s-controller-2; do
  gcloud compute scp encryption-config.yaml ${instance}:~/
done
```

7. Bootstrapping the etcd Cluster

```shell
wget -q --show-progress --https-only --timestamping "https://github.com/etcd-io/etcd/releases/download/v3.4.15/etcd-v3.4.15-linux-amd64.tar.gz"
tar -xvf etcd-v3.4.15-linux-amd64.tar.gz
sudo mv etcd-v3.4.15-linux-amd64/etcd* /usr/local/bin/

sudo mkdir -p /etc/etcd /var/lib/etcd
sudo chmod 700 /var/lib/etcd
sudo cp ca.pem kubernetes-key.pem kubernetes.pem /etc/etcd/

INTERNAL_IP=$(curl -s -H "Metadata-Flavor: Google" \
  http://metadata.google.internal/computeMetadata/v1/instance/network-interfaces/0/ip)

ETCD_NAME=$(hostname -s)
```

```shell
cat <<EOF | sudo tee /etc/systemd/system/etcd.service
[Unit]
Description=etcd
Documentation=https://github.com/coreos

[Service]
Type=notify
ExecStart=/usr/local/bin/etcd \\
  --name ${ETCD_NAME} \\
  --cert-file=/etc/etcd/kubernetes.pem \\
  --key-file=/etc/etcd/kubernetes-key.pem \\
  --peer-cert-file=/etc/etcd/kubernetes.pem \\
  --peer-key-file=/etc/etcd/kubernetes-key.pem \\
  --trusted-ca-file=/etc/etcd/ca.pem \\
  --peer-trusted-ca-file=/etc/etcd/ca.pem \\
  --peer-client-cert-auth \\
  --client-cert-auth \\
  --initial-advertise-peer-urls https://${INTERNAL_IP}:2380 \\
  --listen-peer-urls https://${INTERNAL_IP}:2380 \\
  --listen-client-urls https://${INTERNAL_IP}:2379,https://127.0.0.1:2379 \\
  --advertise-client-urls https://${INTERNAL_IP}:2379 \\
  --initial-cluster-token etcd-cluster-0 \\
  --initial-cluster k8s-controller-0=https://10.240.0.10:2380,k8s-controller-1=https://10.240.0.11:2380,k8s-controller-2=https://10.240.0.12:2380 \\
  --initial-cluster-state new \\
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```
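`--initial-cluster` must list each member’s name and peer URL, and each name has to match that member’s `--name`, which here is `hostname -s`. That is why the renamed `k8s-controller-$n` hostnames appear in it. A sketch that builds the same string from the controller IPs (the helper name is hypothetical):

```shell
#!/bin/sh
# Sketch: build the --initial-cluster value; member n is k8s-controller-n.
# Hypothetical helper; IPs are the controller addresses used in this cluster.
initial_cluster() {
  out=""
  n=0
  for ip in 10.240.0.10 10.240.0.11 10.240.0.12; do
    out="${out:+$out,}k8s-controller-${n}=https://${ip}:2380"
    n=$((n + 1))
  done
  printf '%s\n' "$out"
}

initial_cluster
```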

```shell
sudo systemctl daemon-reload
sudo systemctl enable etcd
sudo systemctl start etcd
```

Verification

```shell
sudo ETCDCTL_API=3 etcdctl member list \
  --endpoints=https://127.0.0.1:2379 \
  --cacert=/etc/etcd/ca.pem \
  --cert=/etc/etcd/kubernetes.pem \
  --key=/etc/etcd/kubernetes-key.pem
```

8. Bootstrapping the Kubernetes Control Plane

Run the following commands on each control plane node. Note that the guide’s command for getting the default region from the metadata server doesn’t work. As a workaround, I used the command described in this GitHub issue, which derives the region from the instance’s zone.

```shell
sudo mkdir -p /etc/kubernetes/config

wget -q --show-progress --https-only --timestamping \
  "https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kube-apiserver" \
  "https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kube-controller-manager" \
  "https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kube-scheduler" \
  "https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kubectl"

chmod +x kube-apiserver kube-controller-manager kube-scheduler kubectl
sudo mv kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/local/bin/

sudo mkdir -p /var/lib/kubernetes/
sudo mv ca.pem ca-key.pem kubernetes-key.pem kubernetes.pem \
  service-account-key.pem service-account.pem \
  encryption-config.yaml /var/lib/kubernetes/
```

```shell
INTERNAL_IP=$(curl -s -H "Metadata-Flavor: Google" \
  http://metadata.google.internal/computeMetadata/v1/instance/network-interfaces/0/ip)

REGION=$(curl -s -H "Metadata-Flavor: Google" http://metadata.google.internal/computeMetadata/v1/instance/zone | cut -d/ -f 4 | sed 's/.\{2\}$//')

KUBERNETES_PUBLIC_ADDRESS=$(gcloud compute addresses describe kubernetes-the-hard-way \
  --region $REGION \
  --format 'value(address)')
```
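The workaround reads the instance’s zone from the metadata server, which returns a path of the form `projects/<project-number>/zones/<zone>`, and strips the zone suffix to get the region. The same idea with shell parameter expansion only (the helper name and the project number below are made up):

```shell
#!/bin/sh
# Sketch: "projects/123456789/zones/us-west1-c" -> "us-west1".
# Equivalent to the cut/sed pipeline for zones shaped like <region>-<letter>.
zone_to_region() {
  zone="${1##*/}"               # keep the text after the last "/": us-west1-c
  printf '%s\n' "${zone%-*}"    # drop the trailing "-<letter>": us-west1
}

zone_to_region "projects/123456789/zones/us-west1-c"   # prints us-west1
```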

```shell
cat <<EOF | sudo tee /etc/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-apiserver \\
  --advertise-address=${INTERNAL_IP} \\
  --allow-privileged=true \\
  --apiserver-count=3 \\
  --audit-log-maxage=30 \\
  --audit-log-maxbackup=3 \\
  --audit-log-maxsize=100 \\
  --audit-log-path=/var/log/audit.log \\
  --authorization-mode=Node,RBAC \\
  --bind-address=0.0.0.0 \\
  --client-ca-file=/var/lib/kubernetes/ca.pem \\
  --enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \\
  --etcd-cafile=/var/lib/kubernetes/ca.pem \\
  --etcd-certfile=/var/lib/kubernetes/kubernetes.pem \\
  --etcd-keyfile=/var/lib/kubernetes/kubernetes-key.pem \\
  --etcd-servers=https://10.240.0.10:2379,https://10.240.0.11:2379,https://10.240.0.12:2379 \\
  --event-ttl=1h \\
  --encryption-provider-config=/var/lib/kubernetes/encryption-config.yaml \\
  --kubelet-certificate-authority=/var/lib/kubernetes/ca.pem \\
  --kubelet-client-certificate=/var/lib/kubernetes/kubernetes.pem \\
  --kubelet-client-key=/var/lib/kubernetes/kubernetes-key.pem \\
  --runtime-config='api/all=true' \\
  --service-account-key-file=/var/lib/kubernetes/service-account.pem \\
  --service-account-signing-key-file=/var/lib/kubernetes/service-account-key.pem \\
  --service-account-issuer=https://${KUBERNETES_PUBLIC_ADDRESS}:6443 \\
  --service-cluster-ip-range=10.32.0.0/24 \\
  --service-node-port-range=30000-32767 \\
  --tls-cert-file=/var/lib/kubernetes/kubernetes.pem \\
  --tls-private-key-file=/var/lib/kubernetes/kubernetes-key.pem \\
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

```shell
sudo mv kube-controller-manager.kubeconfig /var/lib/kubernetes/

cat <<EOF | sudo tee /etc/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-controller-manager \\
  --bind-address=0.0.0.0 \\
  --cluster-cidr=10.200.0.0/16 \\
  --cluster-name=kubernetes \\
  --cluster-signing-cert-file=/var/lib/kubernetes/ca.pem \\
  --cluster-signing-key-file=/var/lib/kubernetes/ca-key.pem \\
  --kubeconfig=/var/lib/kubernetes/kube-controller-manager.kubeconfig \\
  --leader-elect=true \\
  --root-ca-file=/var/lib/kubernetes/ca.pem \\
  --service-account-private-key-file=/var/lib/kubernetes/service-account-key.pem \\
  --service-cluster-ip-range=10.32.0.0/24 \\
  --use-service-account-credentials=true \\
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

```shell
sudo mv kube-scheduler.kubeconfig /var/lib/kubernetes/

cat <<EOF | sudo tee /etc/kubernetes/config/kube-scheduler.yaml
apiVersion: kubescheduler.config.k8s.io/v1beta1
kind: KubeSchedulerConfiguration
clientConnection:
  kubeconfig: "/var/lib/kubernetes/kube-scheduler.kubeconfig"
leaderElection:
  leaderElect: true
EOF

cat <<EOF | sudo tee /etc/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-scheduler \\
  --config=/etc/kubernetes/config/kube-scheduler.yaml \\
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

```shell
sudo systemctl daemon-reload
sudo systemctl enable kube-apiserver kube-controller-manager kube-scheduler
sudo systemctl start kube-apiserver kube-controller-manager kube-scheduler
```

Enable HTTP Check

```shell
sudo apt-get update
sudo apt-get install -y nginx

cat > kubernetes.default.svc.cluster.local <<EOF
server {
  listen 80;
  server_name kubernetes.default.svc.cluster.local;

  location /healthz {
    proxy_pass https://127.0.0.1:6443/healthz;
    proxy_ssl_trusted_certificate /var/lib/kubernetes/ca.pem;
  }
}
EOF

sudo mv kubernetes.default.svc.cluster.local /etc/nginx/sites-available/kubernetes.default.svc.cluster.local
sudo ln -s /etc/nginx/sites-available/kubernetes.default.svc.cluster.local /etc/nginx/sites-enabled/

sudo systemctl restart nginx
sudo systemctl enable nginx
```

Verification

```shell
kubectl cluster-info --kubeconfig admin.kubeconfig
curl -H "Host: kubernetes.default.svc.cluster.local" -i http://127.0.0.1/healthz
```

Kubernetes Cluster configuration

RBAC

```shell
cat <<EOF | kubectl apply --kubeconfig admin.kubeconfig -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
    verbs:
      - "*"
EOF

cat <<EOF | kubectl apply --kubeconfig admin.kubeconfig -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes
EOF
```

Front Load Balancer

```shell
KUBERNETES_PUBLIC_ADDRESS=$(gcloud compute addresses describe kubernetes-the-hard-way \
  --region $(gcloud config get-value compute/region) \
  --format 'value(address)')

gcloud compute http-health-checks create kubernetes \
  --description "Kubernetes Health Check" \
  --host "kubernetes.default.svc.cluster.local" \
  --request-path "/healthz"

gcloud compute firewall-rules create kubernetes-the-hard-way-allow-health-check \
  --network kubernetes-the-hard-way \
  --source-ranges 209.85.152.0/22,209.85.204.0/22,35.191.0.0/16 \
  --allow tcp

gcloud compute target-pools create kubernetes-target-pool \
  --http-health-check kubernetes

gcloud compute target-pools add-instances kubernetes-target-pool \
  --instances k8s-controller-0,k8s-controller-1,k8s-controller-2

gcloud compute forwarding-rules create kubernetes-forwarding-rule \
  --address ${KUBERNETES_PUBLIC_ADDRESS} \
  --ports 6443 \
  --region $(gcloud config get-value compute/region) \
  --target-pool kubernetes-target-pool
```

Verification

```shell
KUBERNETES_PUBLIC_ADDRESS=$(gcloud compute addresses describe kubernetes-the-hard-way \
  --region $(gcloud config get-value compute/region) \
  --format 'value(address)')

curl --cacert ca.pem https://${KUBERNETES_PUBLIC_ADDRESS}:6443/version
```

9. Bootstrapping the Kubernetes Worker Nodes

Provision a worker node

```shell
sudo apt-get update
sudo apt-get -y install socat conntrack ipset

wget -q --show-progress --https-only --timestamping \
  https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.21.0/crictl-v1.21.0-linux-amd64.tar.gz \
  https://github.com/opencontainers/runc/releases/download/v1.0.0-rc93/runc.amd64 \
  https://github.com/containernetworking/plugins/releases/download/v0.9.1/cni-plugins-linux-amd64-v0.9.1.tgz \
  https://github.com/containerd/containerd/releases/download/v1.4.4/containerd-1.4.4-linux-amd64.tar.gz \
  https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kubectl \
  https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kube-proxy \
  https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kubelet

sudo mkdir -p \
  /etc/cni/net.d \
  /opt/cni/bin \
  /var/lib/kubelet \
  /var/lib/kube-proxy \
  /var/lib/kubernetes \
  /var/run/kubernetes

mkdir containerd
tar -xvf crictl-v1.21.0-linux-amd64.tar.gz
tar -xvf containerd-1.4.4-linux-amd64.tar.gz -C containerd
sudo tar -xvf cni-plugins-linux-amd64-v0.9.1.tgz -C /opt/cni/bin/
sudo mv runc.amd64 runc
chmod +x crictl kubectl kube-proxy kubelet runc
sudo mv crictl kubectl kube-proxy kubelet runc /usr/local/bin/
sudo mv containerd/bin/* /bin/
```

Configure CNI Networking

- IPAM: IP address management, i.e. allocating Pod IPs from the node’s Pod CIDR
- ipMasq: sets up IP masquerade. This is necessary if the host will act as a gateway to subnets that cannot route to the IP assigned to the container.

```shell
POD_CIDR=$(curl -s -H "Metadata-Flavor: Google" \
  http://metadata.google.internal/computeMetadata/v1/instance/attributes/pod-cidr)
```
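The config heredocs that follow use an unquoted `EOF`, so the shell substitutes `${POD_CIDR}` while writing the files. If the `pod-cidr` metadata attribute is missing, `POD_CIDR` may end up empty or holding an error response, and that value silently lands in the bridge config and the kubelet config. A small guard, sketched with a hypothetical helper name:

```shell
#!/bin/sh
# Sketch: succeed only if $1 looks like an IPv4 CIDR (a.b.c.d/nn).
# Hypothetical helper; it checks the shape, not that octets are <= 255.
require_pod_cidr() {
  if printf '%s\n' "$1" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}/[0-9]{1,2}$'; then
    return 0
  fi
  echo "POD_CIDR is empty or malformed: '$1'" >&2
  return 1
}

require_pod_cidr "10.200.0.0/24" && echo "ok"
```

For example, run `require_pod_cidr "$POD_CIDR"` and stop if it prints an error.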

```shell
cat <<EOF | sudo tee /etc/cni/net.d/10-bridge.conf
{
  "cniVersion": "0.4.0",
  "name": "bridge",
  "type": "bridge",
  "bridge": "cnio0",
  "isGateway": true,
  "ipMasq": true,
  "ipam": {
    "type": "host-local",
    "ranges": [
      [{"subnet": "${POD_CIDR}"}]
    ],
    "routes": [{"dst": "0.0.0.0/0"}]
  }
}
EOF
```

```shell
cat <<EOF | sudo tee /etc/cni/net.d/99-loopback.conf
{
  "cniVersion": "0.4.0",
  "name": "lo",
  "type": "loopback"
}
EOF
```

Containerd

```shell
sudo mkdir -p /etc/containerd/

cat << EOF | sudo tee /etc/containerd/config.toml
[plugins]
  [plugins.cri.containerd]
    snapshotter = "overlayfs"
    [plugins.cri.containerd.default_runtime]
      runtime_type = "io.containerd.runtime.v1.linux"
      runtime_engine = "/usr/local/bin/runc"
      runtime_root = ""
EOF

cat <<EOF | sudo tee /etc/systemd/system/containerd.service
[Unit]
Description=containerd container runtime
Documentation=https://containerd.io
After=network.target

[Service]
ExecStartPre=/sbin/modprobe overlay
ExecStart=/bin/containerd
Restart=always
RestartSec=5
Delegate=yes
KillMode=process
OOMScoreAdjust=-999
LimitNOFILE=1048576
LimitNPROC=infinity
LimitCORE=infinity

[Install]
WantedBy=multi-user.target
EOF
```

Configure Kubelet

```shell
sudo mv ${HOSTNAME}-key.pem ${HOSTNAME}.pem /var/lib/kubelet/
sudo mv ${HOSTNAME}.kubeconfig /var/lib/kubelet/kubeconfig
sudo mv ca.pem /var/lib/kubernetes/

cat <<EOF | sudo tee /var/lib/kubelet/kubelet-config.yaml
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    enabled: true
  x509:
    clientCAFile: "/var/lib/kubernetes/ca.pem"
authorization:
  mode: Webhook
clusterDomain: "cluster.local"
clusterDNS:
  - "10.32.0.10"
podCIDR: "${POD_CIDR}"
resolvConf: "/run/systemd/resolve/resolv.conf"
runtimeRequestTimeout: "15m"
tlsCertFile: "/var/lib/kubelet/${HOSTNAME}.pem"
tlsPrivateKeyFile: "/var/lib/kubelet/${HOSTNAME}-key.pem"
EOF

cat <<EOF | sudo tee /etc/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=containerd.service
Requires=containerd.service

[Service]
ExecStart=/usr/local/bin/kubelet \\
  --config=/var/lib/kubelet/kubelet-config.yaml \\
  --container-runtime=remote \\
  --container-runtime-endpoint=unix:///var/run/containerd/containerd.sock \\
  --image-pull-progress-deadline=2m \\
  --kubeconfig=/var/lib/kubelet/kubeconfig \\
  --network-plugin=cni \\
  --register-node=true \\
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

Configure the Kube Proxy

```shell
sudo mv kube-proxy.kubeconfig /var/lib/kube-proxy/kubeconfig

cat <<EOF | sudo tee /var/lib/kube-proxy/kube-proxy-config.yaml
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
clientConnection:
  kubeconfig: "/var/lib/kube-proxy/kubeconfig"
mode: "iptables"
clusterCIDR: "10.200.0.0/16"
EOF

cat <<EOF | sudo tee /etc/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Kube Proxy
Documentation=https://github.com/kubernetes/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-proxy \\
  --config=/var/lib/kube-proxy/kube-proxy-config.yaml
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

Start daemons

```shell
sudo systemctl daemon-reload
sudo systemctl enable containerd kubelet kube-proxy
sudo systemctl start containerd kubelet kube-proxy
```

Confirmation

```shell
$ kubectl get nodes --kubeconfig admin.kubeconfig
```

10. Configuring kubectl for Remote Access

kubectl

```shell
KUBERNETES_PUBLIC_ADDRESS=$(gcloud compute addresses describe kubernetes-the-hard-way \
  --region $(gcloud config get-value compute/region) \
  --format 'value(address)')

kubectl config set-cluster kubernetes-the-hard-way \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --server=https://${KUBERNETES_PUBLIC_ADDRESS}:6443

kubectl config set-credentials admin \
  --client-certificate=admin.pem \
  --client-key=admin-key.pem

kubectl config set-context kubernetes-the-hard-way \
  --cluster=kubernetes-the-hard-way \
  --user=admin

kubectl config use-context kubernetes-the-hard-way
```

11. Provisioning Pod Network Routes

```shell
for instance in k8s-worker-0 k8s-worker-1 k8s-worker-2; do
  gcloud compute instances describe ${instance} \
    --format 'value[separator=" "](networkInterfaces[0].networkIP,metadata.items[0].value)'
done
```

```shell
for i in 0 1 2; do
  gcloud compute routes create kubernetes-route-10-200-${i}-0-24 \
    --network kubernetes-the-hard-way \
    --next-hop-address 10.240.0.2${i} \
    --destination-range 10.200.${i}.0/24
done
```

Confirmation.

```shell
gcloud compute routes list --filter "network: kubernetes-the-hard-way"
```

12. Deploying the DNS Cluster Add-on

The CoreDNS 1.8 manifest used by the guide no longer exists. So, following this GitHub comment, I applied the CoreDNS 1.7 manifest that was originally committed to the repository.

```shell
kubectl apply -f https://raw.githubusercontent.com/kelseyhightower/kubernetes-the-hard-way/master/deployments/coredns-1.7.0.yaml
```

Confirm application

```
bash-5.2$ kubectl get pods -l k8s-app=kube-dns -n kube-system
NAME                       READY   STATUS    RESTARTS   AGE
coredns-748f75f5fb-wqqcb   1/1     Running   0          13s
coredns-748f75f5fb-wqr9m   1/1     Running   0          13s
```

Verification

```shell
kubectl run busybox --image=busybox:1.28 --command -- sleep 3600
kubectl get pods -l run=busybox
POD_NAME=$(kubectl get pods -l run=busybox -o jsonpath="{.items[0].metadata.name}")
kubectl exec -ti $POD_NAME -- nslookup kubernetes
```

The output was:

```
Server:    10.32.0.10
Address 1: 10.32.0.10 kube-dns.kube-system.svc.cluster.local

Name:      kubernetes
Address 1: 10.32.0.1 kubernetes.default.svc.cluster.local
```

These IPs belong to Services:

```
bash-5.2$ kubectl get svc -n kube-system -o wide
NAME       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)                  AGE     SELECTOR
kube-dns   ClusterIP   10.32.0.10   <none>        53/UDP,53/TCP,9153/TCP   9m32s   k8s-app=kube-dns
```

```
bash-5.2$ kubectl get svc -owide
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE   SELECTOR
kubernetes   ClusterIP   10.32.0.1    <none>        443/TCP   14d   <none>

bash-5.2$ kubectl get svc -o yaml kubernetes
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: "2023-07-16T04:03:32Z"
  labels:
    component: apiserver
    provider: kubernetes
  name: kubernetes
  namespace: default
  resourceVersion: "208"
  uid: a0b09bfc-5464-4ddc-ab21-3787ac9dec44
spec:
  clusterIP: 10.32.0.1
  clusterIPs:
  - 10.32.0.1
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: 6443
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}
```

I couldn’t find where this Service is defined, and it isn’t in the kube-proxy or kubelet configuration either: kube-apiserver creates the `kubernetes` Service in the `default` namespace by itself (hence the `component: apiserver` label) and gives it the first IP of its `--service-cluster-ip-range` (10.32.0.0/24). The kube-dns IP 10.32.0.10, on the other hand, comes from the CoreDNS manifest and matches `clusterDNS` in the kubelet configuration.
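10.32.0.1 is the first usable address of 10.32.0.0/24, the `--service-cluster-ip-range` passed to kube-apiserver, and the same address is in the API server certificate’s `-hostname` list. A sketch of that "network address + 1" arithmetic, using a hypothetical helper name:

```shell
#!/bin/sh
# Sketch: first usable IPv4 address of a CIDR (network address + 1).
# Hypothetical helper; assumes a well-formed a.b.c.d/nn input.
first_ip() {
  addr="${1%/*}"   # e.g. 10.32.0.0
  bits="${1#*/}"   # e.g. 24
  IFS=. read -r a b c d <<EOF
$addr
EOF
  n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  n=$(( (n & mask) + 1 ))
  printf '%d.%d.%d.%d\n' \
    $(( (n >> 24) & 255 )) $(( (n >> 16) & 255 )) $(( (n >> 8) & 255 )) $(( n & 255 ))
}

first_ip 10.32.0.0/24   # prints 10.32.0.1
```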

13. Smoke Test

Secret

```shell
kubectl create secret generic kubernetes-the-hard-way \
  --from-literal="mykey=mydata"
```
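With encryption at rest enabled, the value stored in etcd should start with `k8s:enc:aescbc:v1:key1` (the `aescbc` provider and the `key1` name from encryption-config.yaml) instead of the plain `mydata`. Rather than eyeballing the hexdump produced by the next command, a hypothetical helper could check the prefix of the raw value read on stdin:

```shell
#!/bin/sh
# Sketch: succeed if stdin starts with the aescbc envelope prefix for key1.
# Hypothetical helper; expects only the raw value (no key line) on stdin.
is_aescbc_encrypted() {
  prefix=$(head -c 22)   # length of "k8s:enc:aescbc:v1:key1"
  [ "$prefix" = "k8s:enc:aescbc:v1:key1" ]
}

# Possible usage from the workstation (not run here); --print-value-only
# makes etcdctl print just the value:
#   gcloud compute ssh k8s-controller-0 --command "sudo ETCDCTL_API=3 etcdctl get \
#     --print-value-only --endpoints=https://127.0.0.1:2379 \
#     --cacert=/etc/etcd/ca.pem --cert=/etc/etcd/kubernetes.pem \
#     --key=/etc/etcd/kubernetes-key.pem \
#     /registry/secrets/default/kubernetes-the-hard-way" \
#     | is_aescbc_encrypted && echo "secret is encrypted at rest"
```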

```shell
gcloud compute ssh k8s-controller-0 \
  --command "sudo ETCDCTL_API=3 etcdctl get \
  --endpoints=https://127.0.0.1:2379 \
  --cacert=/etc/etcd/ca.pem \
  --cert=/etc/etcd/kubernetes.pem \
  --key=/etc/etcd/kubernetes-key.pem \
  /registry/secrets/default/kubernetes-the-hard-way | hexdump -C"
```

etcd

Just looking around the etcd data.

- Listing the keys stored in etcd, following this comment
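With `-w json`, etcdctl returns keys and values base64-encoded, as in the revision example further down. A sketch that decodes such a key and splits it, assuming the `/registry/<resource>/<namespace>/<name>` layout of namespaced objects such as Deployments and Secrets (some resources, like the `apiregistration.k8s.io` apiservices, add an API-group segment, so the split only fits the simple case). The helper name is hypothetical, and it assumes a `base64` that accepts `-d`:

```shell
#!/bin/sh
# Sketch: decode a base64 etcd key and split /registry/<resource>/<namespace>/<name>.
# Hypothetical helper; assumes GNU coreutils base64.
describe_registry_key() {
  key=$(printf '%s' "$1" | base64 -d)
  printf 'key=%s\n' "$key"
  printf '%s\n' "$key" | awk -F/ '{ printf "resource=%s namespace=%s name=%s\n", $3, $4, $5 }'
}

describe_registry_key "L3JlZ2lzdHJ5L2RlcGxveW1lbnRzL2t1YmUtc3lzdGVtL2NvcmVkbnM="
```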

```
$ sudo ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/etcd/ca.pem --cert=/etc/etcd/kubernetes.pem --key=/etc/etcd/kubernetes-key.pem get / --prefix --keys-only | head -n 5
/registry/apiregistration.k8s.io/apiservices/v1.

/registry/apiregistration.k8s.io/apiservices/v1.admissionregistration.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.apiextensions.k8s.io
```

Get a revision of a key

```
$ sudo ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/etcd/ca.pem --cert=/etc/etcd/kubernetes.pem --key=/etc/etcd/kubernetes-key.pem get /registry/deployments/kube-system/coredns -w json | jq .
{
  "header": {
    "cluster_id": 4502693191618639400,
    "member_id": 17982242243123227000,
    "revision": 2046681,
    "raft_term": 123
  },
  "kvs": [
    {
      "key": "L3JlZ2lzdHJ5L2RlcGxveW1lbnRzL2t1YmUtc3lzdGVtL2NvcmVkbnM=",
      "create_revision": 2041628,
      "mod_revision": 2041695,
      "version": 6,
      "value": "<BASE 64 ENCODED>"
    }
  ],
  "count": 1
}
```

Deployment

```shell
kubectl create deployment nginx --image=nginx
POD_NAME=$(kubectl get pods -l app=nginx -o jsonpath="{.items[0].metadata.name}")
```

Port forwarding

```shell
$ kubectl port-forward $POD_NAME 8080:80
```

In a second terminal:

```
$ curl --head http://127.0.0.1:8080
HTTP/1.1 200 OK
Server: nginx/1.25.1
Date: Sun, 30 Jul 2023 23:38:57 GMT
Content-Type: text/html
Content-Length: 615
Last-Modified: Tue, 13 Jun 2023 15:08:10 GMT
Connection: keep-alive
ETag: "6488865a-267"
Accept-Ranges: bytes
```

Logs

```
$ kubectl logs $POD_NAME
/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration
/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/
/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh
10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf
10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf
/docker-entrypoint.sh: Sourcing /docker-entrypoint.d/15-local-resolvers.envsh
/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh
/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh
/docker-entrypoint.sh: Configuration complete; ready for start up
2023/07/30 23:36:31 [notice] 1#1: using the "epoll" event method
2023/07/30 23:36:31 [notice] 1#1: nginx/1.25.1
2023/07/30 23:36:31 [notice] 1#1: built by gcc 12.2.0 (Debian 12.2.0-14)
2023/07/30 23:36:31 [notice] 1#1: OS: Linux 5.15.0-1036-gcp
2023/07/30 23:36:31 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576
2023/07/30 23:36:31 [notice] 1#1: start worker processes
2023/07/30 23:36:31 [notice] 1#1: start worker process 28
2023/07/30 23:36:31 [notice] 1#1: start worker process 29
127.0.0.1 - - [30/Jul/2023:23:38:47 +0000] "GET / HTTP/1.1" 200 615 "-" "curl/7.88.1" "-"
127.0.0.1 - - [30/Jul/2023:23:38:50 +0000] "GET / HTTP/1.1" 200 615 "-" "curl/7.88.1" "-"
127.0.0.1 - - [30/Jul/2023:23:38:57 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.88.1" "-"
```

Exec

```
$ kubectl exec -ti $POD_NAME -- nginx -v
nginx version: nginx/1.25.1
```

Services

```shell
kubectl expose deployment nginx --port 80 --type NodePort

NODE_PORT=$(kubectl get svc nginx \
  --output=jsonpath='{range .spec.ports[0]}{.nodePort}')
```
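Before opening a firewall rule, it may be worth checking that `NODE_PORT` is a number inside the `--service-node-port-range` (30000-32767) configured on the API server; an empty value would produce `--allow=tcp:`. A sketch with a hypothetical helper name:

```shell
#!/bin/sh
# Sketch: succeed only if $1 is an integer within 30000-32767,
# the --service-node-port-range used in this cluster. Hypothetical helper.
in_node_port_range() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # empty or non-numeric
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

in_node_port_range 31234 && echo "31234 is a valid NodePort"
```

For example: `in_node_port_range "$NODE_PORT" || echo "unexpected NODE_PORT: $NODE_PORT"`.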

```shell
gcloud compute firewall-rules create kubernetes-the-hard-way-allow-nginx-service \
  --allow=tcp:${NODE_PORT} \
  --network kubernetes-the-hard-way
```

Confirmation

```shell
EXTERNAL_IP=$(gcloud compute instances describe k8s-worker-0 \
  --format 'value(networkInterfaces[0].accessConfigs[0].natIP)')

curl -I http://${EXTERNAL_IP}:${NODE_PORT}
```

14. Cleaning Up

The following steps haven’t been tested yet.

```shell
gcloud -q compute instances delete \
  k8s-controller-0 k8s-controller-1 k8s-controller-2 \
  k8s-worker-0 k8s-worker-1 k8s-worker-2 \
  --zone $(gcloud config get-value compute/zone)

gcloud -q compute forwarding-rules delete kubernetes-forwarding-rule \
  --region $(gcloud config get-value compute/region)

gcloud -q compute target-pools delete kubernetes-target-pool

gcloud -q compute http-health-checks delete kubernetes

gcloud -q compute addresses delete kubernetes-the-hard-way

gcloud -q compute firewall-rules delete \
  kubernetes-the-hard-way-allow-nginx-service \
  kubernetes-the-hard-way-allow-internal \
  kubernetes-the-hard-way-allow-external \
  kubernetes-the-hard-way-allow-health-check

gcloud -q compute routes delete \
  kubernetes-route-10-200-0-0-24 \
  kubernetes-route-10-200-1-0-24 \
  kubernetes-route-10-200-2-0-24

gcloud -q compute networks subnets delete kubernetes

gcloud -q compute networks delete kubernetes-the-hard-way

gcloud -q compute addresses delete kubernetes-the-hard-way \
  --region $(gcloud config get-value compute/region)
```
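Since these cleanup steps are untested, one cautious option is to print each command before actually running it. A sketch of a hypothetical `run` wrapper controlled by a `DRY_RUN` variable (neither is part of gcloud); it defaults to printing only:

```shell
#!/bin/sh
# Sketch: print the command unless DRY_RUN=0, in which case run it.
# run() and DRY_RUN are hypothetical names, not gcloud features.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '[dry-run] %s\n' "$*"
  else
    "$@"
  fi
}

run gcloud -q compute target-pools delete kubernetes-target-pool
```

Prefix each cleanup command with `run`, review the printed commands, then run them again after `export DRY_RUN=0`. Command substitutions such as `$(gcloud config get-value compute/region)` are still evaluated in dry-run mode, but they only read the local gcloud configuration.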